TechScape: Why Can’t Crypto Eradicate Its Bugs?

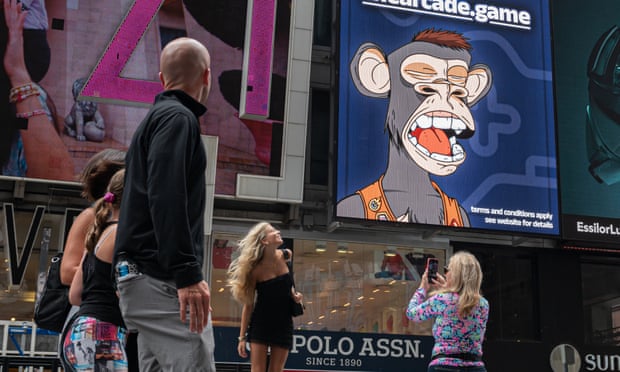

In February, Twitter user Brodan, an engineer at Giphy, noticed something strange about the Bored Ape Yacht Club (BAYC), the premier ape-based non-fungible token collection. A record intended to cryptographically prove the trustworthiness of the apes on the blockchain contained 31 identical entries, a situation that was supposed to be impossible. “There’s something super fishy about some of your monkeys,” Brodan wrote.

Six months later, when the Garbage Day newsletter brought the issue to wider attention, Brodan’s questions had still not been answered. The situation is all too common in the crypto industry and the wider open source community, and it raises the question of whether there is something fundamentally wrong with the idea that a crowd of amateurs can effectively hold large projects to account.

The problem lies in an obscure record called a “provenance hash”. Published by BAYC’s creator, Yuga Labs, it is intended to prove that there was no monkey business (sorry) in the initial allocation of the apes. The problem the team had to solve is that some apes are rarer, and therefore more valuable, than others. But in the initial “mint” they were allocated randomly to the first 10,000 people to apply. To prove that they really were distributed at random, rather than with a valuable few steered to insiders, the team published a provenance hash: a list of cryptographic fingerprints (hashes), one for each of the 10,000 apes, showing that the apes had been pre-generated and pre-assigned without revealing what their traits were.
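To make the mechanism concrete, here is a minimal Python sketch of one common way to build such a record. It assumes each image is fingerprinted with SHA-256 and that the final provenance hash is the SHA-256 of all those fingerprints concatenated in allocation order. That scheme is an illustrative assumption, not a reproduction of Yuga Labs’ actual procedure, and the image bytes are hypothetical placeholders.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    """SHA-256 fingerprint of one image, as a 64-character hex string."""
    return hashlib.sha256(image_bytes).hexdigest()


def provenance_hash(images: list[bytes]) -> tuple[list[str], str]:
    """Return the per-image hashes (in allocation order) plus one combined
    hash over their concatenation. Assumed scheme, for illustration only."""
    per_image = [image_hash(img) for img in images]
    combined = hashlib.sha256("".join(per_image).encode("ascii")).hexdigest()
    return per_image, combined


# Hypothetical stand-ins for image files; a real script would read them from disk.
images = [b"ape #0 pixels", b"ape #1 pixels", b"ape #2 pixels"]
hashes, final = provenance_hash(images)
print(len(hashes), len(final))  # 3 64
```

Publishing the combined hash before the mint commits the creator to both the images and their order; once the images are revealed, anyone can recompute it. Change a single pixel, or swap two images, and the result no longer matches.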

So far so good, except that 31 of those hashes were identical. Since the 31 apes they were assigned to were all different, the provenance record for those apes was broken, and in theory they could have been altered to suit whoever bought them.
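Spotting the problem required no special access, only a scan for repeats. A minimal sketch, assuming the published record is a list of hex-string hashes ordered by token ID (the sample values below are made up): because distinct images should never share a SHA-256 hash in practice, any repeated entry means two tokens were committed to the same input.

```python
from collections import defaultdict


def find_duplicate_hashes(hashes: list[str]) -> dict[str, list[int]]:
    """Map each hash that appears more than once to the token IDs at which it
    appears. Assumes a token's ID is its position in the list."""
    positions: dict[str, list[int]] = defaultdict(list)
    for token_id, h in enumerate(hashes):
        positions[h].append(token_id)
    return {h: ids for h, ids in positions.items() if len(ids) > 1}


# Hypothetical record: tokens 1 and 3 share a hash, which should be impossible
# if every image is distinct.
record = ["aa11", "bb22", "cc33", "bb22"]
print(find_duplicate_hashes(record))  # {'bb22': [1, 3]}
```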

Earlier this summer, I asked Yuga Labs about the duplicates, and the company first pointed to evidence that it hadn’t pulled a fast one: none of the 31 apes had gone to anyone with insider connections, nor had they been generated with particularly desirable traits. Which is true, but also unsatisfying. If you learned that the company that installed your burglar alarm had never connected it, “nothing was stolen” would be only a partial answer.

When pressed, the company investigated further and found the cause of the problem: while preparing the provenance hashes, it had triggered a rate-limiting error from the server storing the images of the apes. As a result, 31 times, instead of hashing an image of an ape, the company unwittingly hashed the error message “429 Too Many Requests”. Oops.
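The failure mode is easy to reproduce in miniature. The sketch below uses a hypothetical `fake_fetch` function in place of a real HTTP client and made-up example.com URLs; it is not Yuga Labs’ script. The naive version hashes whatever body comes back, so every throttled request yields the same fingerprint, while the checked version refuses to hash anything but a successful response.

```python
import hashlib

RATE_LIMIT_BODY = b"429 Too Many Requests"


def fake_fetch(url: str, throttled: bool) -> tuple[int, bytes]:
    """Hypothetical stand-in for an HTTP GET, returning (status, body).
    When throttled, the 'server' sends an error message instead of the image."""
    if throttled:
        return 429, RATE_LIMIT_BODY
    return 200, f"image bytes for {url}".encode()


def naive_hash(url: str, throttled: bool) -> str:
    # Bug: hashes whatever body came back, error message included.
    _status, body = fake_fetch(url, throttled)
    return hashlib.sha256(body).hexdigest()


def checked_hash(url: str, throttled: bool) -> str:
    # Guard: refuse to hash anything that is not a successful response.
    status, body = fake_fetch(url, throttled)
    if status != 200:
        raise RuntimeError(f"fetch of {url} failed with HTTP {status}")
    return hashlib.sha256(body).hexdigest()


# Two different (hypothetical) images, both throttled: the naive hashes collide.
a = naive_hash("https://example.com/ape/7.png", throttled=True)
b = naive_hash("https://example.com/ape/8.png", throttled=True)
print(a == b)  # True
```

In a real script, a guard like this would typically be paired with retrying after a delay, so that a throttled request is fetched again rather than silently recorded.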

I asked Yuga Labs co-founder Kerem Atalay, who works under the handle Emperor Tomato Ketchup, whether he felt the long gap between the problem and its fix undermined the rationale for a provenance hash. If no one checks these things, what’s the point? “I think in this case maybe the reason it went unnoticed for so long is that this is such a heavily scrutinized project to begin with,” Atalay said. “The provenance hash became a minor feature of this entire project the moment it exploded. If a single pixel had changed in the entire collection after that point, it would have been extremely obvious.”

On that account, provenance hashes are useful for rebutting accusations of favoritism, but if there are no accusations, it’s not surprising that no one checks the hash. Yuga Labs made a similar defense of another year-long oversight, only resolved a few months ago: the company had retained the ability to create new apes whenever it wanted, despite vowing to give it up. Unlike the provenance problem, this one was noticed quickly: in June 2021, Yuga Labs said it would fix the oversight “in the next day or two”.

In fact, it took more than a year. “Although we intended to do this for a long time, we had not been careful,” Atalay tweeted. “Felt comfortable doing it now. Finished.”

Such problems are by no means limited to Yuga Labs, or to the crypto sector at large. Last week, Google’s cybersecurity team, Project Zero, announced the discovery of a new security vulnerability in Android. Well, it was new to them: the exploit had already been used by hackers “since at least November 2020”. But the root cause of the bug was even older: it was reported to the open source developers responsible in August 2016, and a proposed fix was rejected a month later.

The result was years in which nearly every Android phone on the market carried a serious security vulnerability, even though the issue sat in the public record for all to see.

It is unclear exactly how long this vulnerability had been present in the code, but in other cases a flaw’s long lifespan has itself been the source of major problems. In April, a bug was discovered in Curl, a command-line tool, that had been present for 20 years.

And last December, a weakness was discovered in a logging tool called Log4j which was, according to the UK’s National Cyber Security Centre, “potentially the most serious computer vulnerability in years”. The flaw was far from complex, and an attacker would barely have needed to try before potentially taking control of “millions of computers worldwide”. Yet it had been sitting, undetected, in the open source software for eight years. That oversight was not only embarrassing for those who believed in the security model of open source software, but also disastrous: it meant that affected versions of the software were everywhere, and the ongoing clean-up might never be completed.

Small mistakes, big problems

Open source software like Log4j underpins much of the modern world. But over time, the basic assumptions of the model have begun to show their weaknesses. A small piece of software, used and reused by thousands of programs and ending up installed on millions of computers, should have all the eyes of the world searching it for problems. Instead, it seems that the more ubiquitous and functional a piece of software is, the more willing people are to trust it without checking. (As always, there is a relevant cartoon from the webcomic XKCD.)

In a perverse way, crypto has solved some of these problems by attaching a tangible financial reward to finding bugs. The idea of a “bug bounty” is nothing new: major software developers such as Apple and Microsoft pay people who report security vulnerabilities. The aim is to give people an incentive to report a bug rather than build malware that exploits it, and to fund the kind of crowdsourced research that open source software is supposed to encourage.

With crypto projects, there’s effectively a built-in bug bounty that runs 24/7 from the moment they go live: if you’re the clever person who finds a bug in the right crypto project, your bounty could be… all the money that project holds. So when hackers from North Korea found a hole in the Ronin network that underpins the video game Axie Infinity, they got away with more than half a billion dollars. The downside of such an approach is, of course, that even if bugs are found quickly, the project tends not to survive the experience.

For Yuga Labs, the saving grace is that the only people who could have abused the oversights were Yuga Labs employees themselves, who were widely seen as trustworthy enough not to worry about. But investors in the wider crypto ecosystem would do well to be wary: even if a project says it has published proof that it is trustworthy, experience shows there’s no reason to assume anyone has checked.

If you would like to read the full version of the newsletter, please subscribe to receive TechScape in your inbox every Wednesday.